Large language models (LLMs) have become far more capable in recent years, and with that growth comes a need for accessible tools to build on them. LangChain is an open-source framework that helps developers integrate LLMs into their applications. This beginner’s guide explores how LangChain works and what it offers.

Whether you’re a budding developer, a technology enthusiast, or simply curious about language models, this guide aims to be a practical companion as you learn LangChain. Along the way we’ll look at LangSmith, LangServe, and how LLMs fit into the LangChain ecosystem.

1. “Introduction to LangChain: Unveiling the Future of Language Models”

In the ever-evolving landscape of artificial intelligence, LangChain has emerged as a pioneering framework set to redefine how we interact with and leverage the power of language models. LangChain is not just a tool but an ecosystem that seamlessly integrates the capabilities of large language models (LLMs) into a variety of applications, enabling developers and businesses to harness the full potential of AI in their projects.

At its core, LangChain serves as a conduit, connecting the dots between complex LLMs and the end-users who seek to employ them in real-world scenarios. Through its modular design, developers can easily incorporate advanced language understanding and generation into their applications without the steep learning curve that traditionally accompanies AI integrations. This ease of use is paramount in democratizing access to cutting-edge technology and fostering a more inclusive AI landscape.

With LangSmith, a companion platform, developers can trace, debug, test, and evaluate LLM applications. It records the inputs and outputs of each step in a chain, which makes it easier to see why an application produced a particular response and to check that output quality holds up as prompts and models change.

Another integral part of the LangChain suite is LangServe, a library for deploying LangChain chains as REST APIs. Once a chain is served this way, any application that can make an HTTP request can use it, which lets organizations of all sizes put natural language processing features into production.

As you embark on your journey with LangChain, you’re not simply adopting a new technology; you’re stepping into the future of language models. Whether you’re a developer keen on integrating LLMs into your software, a business looking to improve customer interactions, or a researcher exploring the frontiers of AI, LangChain offers the tools and flexibility needed to achieve your goals.

2. “Getting Started with LangChain: Your First Steps to Mastering LLM Integration”

Embarking on the journey of mastering LangChain, an open-source framework designed to integrate Large Language Models (LLMs) like GPT-3 into applications, can seem daunting at first glance. However, with a step-by-step approach, beginners can quickly become adept at leveraging the power of LLMs for various tasks. Here’s a guide to take your first steps into the world of LangChain.

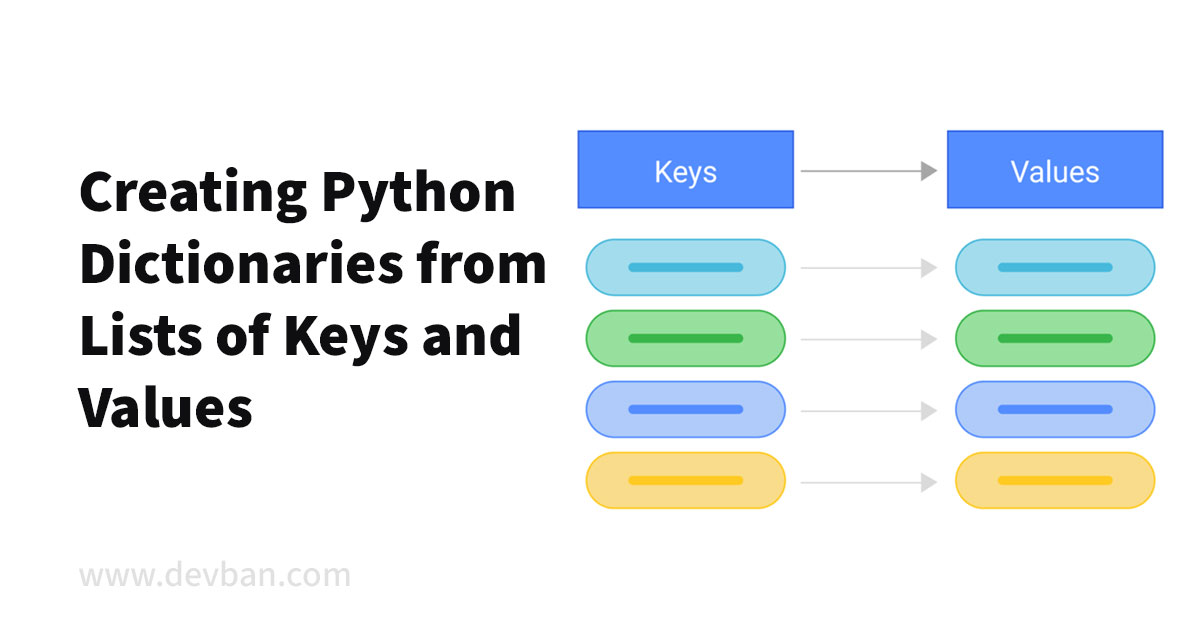

To get started, you’ll need a basic understanding of programming concepts and familiarity with Python, as LangChain is built on this language. Once you have that foundation set, your first order of business is to install LangChain. You can do this by running the pip install langchain command, which fetches the latest version of LangChain from PyPI, Python’s package index.

Once installed, explore LangChain’s core building blocks, such as model wrappers, prompt templates, and chains, which let you assemble LLM-powered applications. Two companion tools are worth knowing about early, although neither is required for a first project. LangSmith is a platform for tracing, debugging, and evaluating what your chains do; think of it as the workshop where you inspect and refine your work. LangServe is a library that serves chains as web APIs, making it easier to integrate them into web applications. LangServe is distributed as its own package, and LangSmith is a hosted platform that you connect to with an API key.

As a beginner, it’s essential to understand the core concepts of how LLMs work within the LangChain framework. LLMs are powerful tools for natural language processing tasks, and LangChain provides a set of abstractions that make it easier to work with these models. By using LangChain, you can abstract away the complexities of directly interfacing with LLMs and focus on the creative aspects of building your application.

To get hands-on experience, start by creating simple projects. For instance, you could use LangChain to build a chatbot that leverages the power of LLMs to understand and respond to user inputs. By experimenting with different configurations and parameters, you’ll gain a better understanding of how LangChain interacts with LLMs, and how to tweak performance to suit your needs.

Documentation is key when learning any new technology, and LangChain is no exception. Make use of the extensive documentation available to learn about the different modules and functionalities. The documentation often includes examples that can serve as a starting point for your projects.

Community support is another critical aspect of mastering LangChain. Engage with the LangChain community through forums, GitHub, or other social platforms where developers share their experiences and solutions. This can be invaluable in overcoming hurdles you might encounter as you learn.

Remember, the journey to mastering LangChain and LLM integration is a marathon, not a sprint. Take your time to understand the basics, experiment with small projects, and gradually scale up. With patience and practice, you’ll become proficient at using LangChain to harness the capabilities of Large Language Models, paving the way for innovative and intelligent applications that can understand and process human language with remarkable efficiency.

3. “Exploring LangChain’s Core Components: LangSmith, LangServe, and More”

When embarking on a journey with LangChain, it’s essential to familiarize yourself with its core components, each designed to harness the power of Large Language Models (LLMs) effectively. LangChain integrates several modules, but among the most critical are LangSmith and LangServe, both of which play pivotal roles in the framework’s functionality.

LangSmith is the observability and evaluation side of the ecosystem. It is a separate platform, not an application builder: it captures traces of your chains and agents, lets you inspect each prompt and response, and helps you run test datasets against your application. The work of combining LLMs with APIs, databases, and other external systems is done with LangChain’s own building blocks, such as chains, tools, and retrievers. LangSmith shows you what those pieces did at run time, which is useful across use cases from automating customer service interactions to generating dynamic content.

LangServe, on the other hand, is a deployment library. It wraps a chain in a web server and exposes it as a REST API, so other applications can send inputs and receive outputs over HTTP. LangServe does not host the language model itself; the chain still calls whichever model provider you configured. Its role is to give applications built with LangChain a standard interface that other software can rely on.

Beyond LangSmith and LangServe, LangChain comprises additional tools and libraries that further enhance the development experience. These tools often include functionalities for conversation handling, context management, and multi-modal applications that can interpret and generate not only text but also images and other forms of data.
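To make "context management" concrete, here is a minimal sketch of one common idea: keeping only the most recent conversation turns so the prompt stays short. It uses only the Python standard library and is not LangChain’s own implementation; the class name `ConversationWindow` and the `speaker: text` prompt layout are assumptions made for illustration.

```python
from collections import deque
from typing import Deque, List, Tuple


class ConversationWindow:
    """Keep only the most recent turns so the prompt stays within a budget."""

    def __init__(self, max_turns: int = 3) -> None:
        # Each turn is a (speaker, text) pair; the oldest turn is dropped
        # automatically once max_turns is exceeded.
        self._turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self._turns.append((speaker, text))

    def as_prompt(self, new_user_message: str) -> str:
        # Render the remembered turns, then the new message, then a cue
        # for the model to continue as the assistant.
        lines: List[str] = [f"{speaker}: {text}" for speaker, text in self._turns]
        lines.append(f"user: {new_user_message}")
        lines.append("assistant:")
        return "\n".join(lines)


window = ConversationWindow(max_turns=2)
window.add("user", "Hi")
window.add("assistant", "Hello!")
window.add("user", "What is LangChain?")
window.add("assistant", "A framework for LLM apps.")
prompt_text = window.as_prompt("And LangServe?")
print(prompt_text)
```

With `max_turns=2`, the first greeting exchange is forgotten and only the latest question and answer are sent along with the new message. Real applications often trim by token count instead of turn count.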

When working with LangChain, building advanced applications becomes more accessible thanks to its modular architecture, which lets developers plug in components as needed without rebuilding from scratch. With LangChain’s building blocks for assembling applications, LangSmith for inspecting and evaluating them, and LangServe for exposing them as APIs, you can cover the path from prototype to production.

As LangChain continues to evolve, it’s likely that we’ll see the introduction of more innovative components that will further simplify the process of integrating LLMs into diverse applications. Whether you’re a seasoned developer or new to the world of LLMs, LangChain offers a structured yet flexible approach to creating cutting-edge AI-powered solutions.

4. “LangSmith Unpacked: Crafting Custom Language Solutions with Ease”

LangSmith is a platform within the LangChain ecosystem for debugging, testing, and monitoring LLM applications. It does not modify the language model itself. Instead, it shows you exactly what your application sent to the model and what came back, so you can tailor prompts and chain logic to your use case. Whether you are building a sophisticated chatbot, a dynamic content generator, or an intelligent search assistant, LangSmith gives you the visibility needed to refine the application until it meets your requirements.

The appeal of LangSmith lies in its web interface, which presents each run as a trace: a step-by-step record of prompts, model responses, intermediate tool calls, latency, and token usage. Instead of scattering print statements through your code, you can open a trace and see where a chain went wrong. You can also collect examples into datasets and run evaluations against them, which makes it easier to tell whether a prompt change improved results.

LangSmith also complements LangServe, the LangChain library for exposing chains as REST APIs. Once you have refined a chain with the help of LangSmith’s traces, you can serve it with LangServe and keep tracing requests after deployment, allowing you to focus on refining your application and enhancing user experience.

As you begin your journey with LangChain and explore the capabilities of LangSmith, keep in mind that its value comes from feedback. With LangSmith, you’re not changing the language model; you’re learning how your prompts and chains behave so you can shape the application around your vision and your audience.

In summary, LangSmith gives developers a practical way to understand and improve applications built on LLMs. Whether you’re a seasoned AI practitioner or a curious beginner, adding LangSmith early can shorten the path from a first prototype to a reliable language-based application.

5. “LangServe Explained: Streamlining Language Model Deployment for Beginners”

To begin with, let’s unpack what LangServe is and how it fits within the LangChain ecosystem. LangServe is a Python library that turns a LangChain chain into a web service. It sits between client applications and your chain: clients send inputs over HTTP, the chain runs on your server and calls the LLM of your choice, such as OpenAI’s GPT-3 or another compatible model, and the result is sent back. LangServe does not host or maintain the model itself, and you remain responsible for running the server.

For beginners, one of the most significant advantages of using LangServe is the reduction in initial setup time and complexity. Instead of hand-writing request handlers, input validation, and API documentation for each chain, you register the chain with a few lines of code and LangServe generates standard endpoints for it, along with a simple playground page for trying the chain in a browser. You still need to supply API keys for your model provider, but you can spend more of your time crafting prompts and interpreting model outputs.

Additionally, because clients talk to the served chain and not to the model provider directly, you can change the model behind a chain without altering the client application’s code, as long as the chain’s inputs and outputs stay the same. This flexibility is valuable for experimentation and for finding the model that best suits your needs.

Another important consideration is scalability. LangServe is built on FastAPI, so it can be deployed and scaled like any other Python web application, for example by running several server processes behind a load balancer. LangServe does not scale itself automatically, so plan for this as demand for your application grows.

Incorporating LangServe into your LangChain workflow is straightforward. You configure your application to send inputs to the LangServe server, which runs the chain and forwards the resulting prompt to the LLM. The language model returns the generated text to the chain, and LangServe passes the chain’s output back to your application in the HTTP response. LangServe also offers batch and streaming endpoints for cases where throughput or perceived latency matters.
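The client side of that round trip can be sketched with the Python standard library alone. This is a hedged illustration, assuming LangServe’s documented convention of an `/invoke` endpoint that accepts a JSON body of the form `{"input": ...}` and returns `{"output": ...}`. The host, port, chain path `my-chain`, and the `topic` input key are hypothetical and depend on how your own server is set up.

```python
import json
import urllib.request
from typing import Any, Dict


def build_invoke_request(
    base_url: str, chain_path: str, chain_input: Dict[str, Any]
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a served chain."""
    url = f"{base_url.rstrip('/')}/{chain_path.strip('/')}/invoke"
    body = json.dumps({"input": chain_input}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(request: urllib.request.Request, timeout: float = 30.0) -> Any:
    """Send the request and return the chain's output field.

    Only call this when a server is actually running at the target URL.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return payload["output"]


# Hypothetical server address, chain path, and input key.
request = build_invoke_request("http://localhost:8000", "my-chain", {"topic": "bears"})
print(request.full_url)
```

Separating request construction from sending keeps the example runnable without a server; calling `invoke(request)` performs the actual round trip once one is available.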

In summary, LangServe is a useful component of the LangChain suite for beginners who want to put a chain behind an API. It removes much of the boilerplate of building a web service around a chain, so you can get a working endpoint quickly and iterate from there. You will still need basic hosting knowledge to run it in production. Whether you’re a developer, a researcher, or a hobbyist, LangServe can help you share your LLM application with confidence.

6. “Navigating the LangChain Ecosystem: A Beginner’s Roadmap”

Embarking on the journey through the LangChain ecosystem can be an exciting adventure for those new to the world of Large Language Models (LLMs). As you begin, understanding the core components and services like LangSmith and LangServe will help you navigate the ecosystem with confidence. Here’s a beginner’s roadmap to help you get started.

First, let’s clarify what LangChain is. LangChain is an open-source framework designed to make it easier for developers to build applications on top of LLMs. These models, powered by advanced machine learning algorithms, are capable of understanding and generating human-like text, making them a powerful tool across various industries.

When you’re ready to dive in, start by familiarizing yourself with the LangChain documentation. The documentation is your compass in the LangChain ecosystem, providing detailed guidance on setup, features, and how to integrate LLMs into your projects.

Next, explore LangSmith, a key component of the LangChain ecosystem. LangSmith is a platform that helps developers trace, test, and evaluate their LLM applications. By reviewing traces of each run, you can see how prompts and chain steps affect the output and adjust them to better suit your application’s needs, leading to higher quality and more relevant results.

LangServe, another vital part of the LangChain ecosystem, is a library that deploys LangChain chains as web APIs. This means other applications can call your chain over HTTP without embedding LangChain code themselves. Understanding how to work with LangServe will be useful as you move from local experiments to applications that other people and services can reach.

As a beginner, it’s important to engage with the LangChain community. This vibrant community of developers and enthusiasts is a treasure trove of knowledge and experience. Participating in forums, attending webinars, and contributing to discussions can accelerate your learning curve and provide insights that are not readily available in documentation.

To put your knowledge into practice, start with small projects. Create simple applications using the LangChain framework to get a feel for how the components fit together. Experiment with different LLMs, play with LangSmith’s tools, and deploy a test service using LangServe. Real-world experience is the best teacher, and these initial projects will build your confidence in navigating the LangChain ecosystem.

Remember, as with any new technology, there may be a learning curve. Don’t be discouraged by initial challenges. Keep experimenting, learning, and reaching out to the community for support. With persistence and curiosity, you’ll soon find yourself adept at leveraging the full potential of LangChain and its associated services.

In conclusion, your journey through the LangChain ecosystem begins with understanding the framework, exploring its core components like LangSmith and LangServe, and engaging with the community. By following this beginner’s roadmap, you’ll not only navigate the ecosystem effectively but also unlock the transformative power of LLMs for your projects.

7. “The ABCs of LLMs: Understanding Language Models within LangChain”

LangChain is an innovative platform designed to leverage the power of Large Language Models (LLMs) to create applications that can understand, process, and generate human-like text. As a beginner diving into the world of LangChain, it’s crucial to understand the ABCs of LLMs, which are the backbone of the LangChain ecosystem.

Large Language Models are a type of artificial intelligence that have been trained on vast amounts of text data. They have the remarkable ability to understand context, generate coherent text, and even perform tasks like summarization, translation, and question-answering. LangChain harnesses these capabilities to enable developers to build applications that can interact with users in natural language.

The LangChain ecosystem provides developers with tools like LangSmith and LangServe, which make working with LLMs more accessible and efficient. LangSmith is a platform for tracing and evaluating LLM applications. It does not reshape the model itself; it shows you how the model responded to each prompt, so you can ‘temper’ your prompts and chain logic for precision and relevance.

On the other hand, LangServe is a library for serving LangChain chains as REST APIs. It simplifies the process of exposing LLM functionality to other applications, although hosting and scaling the server remain your responsibility.

When starting with LangChain, it’s important to grasp the concept of ‘prompt engineering,’ which is the practice of designing prompts that elicit the desired response from an LLM. Effective prompt engineering can significantly enhance the performance of your LangChain applications, ensuring that the generated text is aligned with the application’s goals.
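As a small illustration of prompt engineering, the sketch below builds a structured prompt from a reusable template using only the Python standard library. The wording of the template, the function name `build_prompt`, and the `max_sentences` parameter are assumptions chosen for the example; LangChain offers its own prompt template classes for the same purpose.

```python
from string import Template

# A reusable prompt: a role, a clear instruction, an explicit limit,
# and a clearly delimited slot for the input text.
SUMMARY_PROMPT = Template(
    "You are a concise technical writer.\n"
    "Summarize the text below in at most $max_sentences sentences.\n\n"
    "Text:\n$text"
)


def build_prompt(text: str, max_sentences: int = 2) -> str:
    """Fill the template with the user's text and the length limit."""
    return SUMMARY_PROMPT.substitute(text=text.strip(), max_sentences=max_sentences)


print(build_prompt("  LangChain helps developers build LLM applications.  ", 1))
```

Keeping the instruction, the constraint, and the input in fixed positions makes it easier to change one of them and compare the model’s responses.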

In summary, understanding the ABCs of LLMs within LangChain involves grasping how these models process language, how LangSmith and LangServe help you evaluate and deploy the applications you build on them, and why prompt engineering matters for eliciting accurate and useful responses. With this knowledge, you can begin to explore the potential of LangChain and use LLMs to create powerful language-based applications.

8. “Setting Up Your First LangChain Project: A Step-by-Step Guide for Newbies”

Embarking on your first LangChain project can be an exciting journey into the world of language models and AI-driven applications. LangChain, a flexible framework designed to simplify and expedite the development process, makes it relatively straightforward to get started. This step-by-step guide will walk newbies through setting up their inaugural LangChain project, ensuring they’re equipped to harness the power of Large Language Models (LLMs) like GPT-3 or its successors.

Step 1: Prerequisites

Before diving into LangChain, ensure you have a basic understanding of Python programming, as LangChain is a Python library. You’ll also need Python installed on your computer, preferably a recent version that LangChain supports. Check the package’s listed requirements, since the newest Python release is not always supported right away.

Step 2: Installation

Install LangChain using pip, Python’s package installer. Open your terminal or command prompt and enter the following command:

    pip install langchain

This command fetches the latest version of LangChain and installs it along with its dependencies.

Step 3: Configuration

To interact with Large Language Models (LLMs) like OpenAI’s GPT-3, you’ll need to obtain API keys from the respective providers. LangChain supports integration with multiple LLMs, so choose your preferred LLM and configure your LangChain environment with the API key according to the documentation provided.
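A common way to configure the key is to keep it out of your source code and read it from an environment variable. The sketch below assumes the variable is named `OPENAI_API_KEY`, which is the name OpenAI’s tooling conventionally reads; the helper `load_api_key` is hypothetical, and other providers use different variable names.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable.

    Raises a clear error instead of letting a missing key surface later
    as a confusing authentication failure.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before running this script."
        )
    return key


if __name__ == "__main__":
    try:
        api_key = load_api_key()
        print("API key found.")
    except RuntimeError as error:
        print(error)
```

Set the variable in your shell before running your script, and avoid committing keys to version control.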

Step 4: Initialize Your Project

Create a new directory for your project and navigate to it. Initialize a new Python file – this will be the main file where you’ll write your LangChain code. You can name it anything, for example, my_first_langchain.py.

Step 5: Import LangChain

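At the top of your new Python file, import the classes you need. For a first project that means a model wrapper, a chain, and a prompt template. LangSmith and LangServe are separate tools for tracing and deployment, and are not needed here. These imports follow the classic LangChain API; newer releases move provider integrations into separate packages, so check the documentation for your installed version:

    # Model wrapper, chain, and prompt template from the classic LangChain API
    from langchain.llms import OpenAI
    from langchain.chains import LLMChain
    from langchain.prompts import PromptTemplate

Step 6: Create an Instance

Create the model wrapper, passing in the API key and any other configurations specific to your chosen LLM, then combine it with a prompt template in a chain:

    llm = OpenAI(openai_api_key='your_api_key_here')
    # A pass-through template: the chain sends input_text to the model unchanged
    prompt = PromptTemplate(input_variables=['input_text'], template='{input_text}')
    my_chain = LLMChain(llm=llm, prompt=prompt)

Step 7: Build Your First Chain

LangChain operates on the principle of “chains,” which are sequences of operations you want the LLM to perform. The chain defined above is as simple as it gets: it asks the LLM to generate a text response to a prompt. Run it like this:

    response = my_chain.run(input_text='Hello, world!')
    print(response)

Step 8: Execute and Iterate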

Run your Python file to execute your LangChain code. If everything is set up correctly, you should see a response from the LLM printed in your terminal. Experiment with different inputs and chain configurations to get a feel for how LangChain works.

    python my_first_langchain.py

Step 9: Further Exploration

With your first project set up, you’re well on your way to exploring the full potential of LangChain. Delve into the LangChain documentation to discover advanced features like context management, conditional logic, and integrations with other tools and APIs.

By following these steps, you’ve successfully set up your first LangChain project and taken your initial steps into the world of LLMs. Remember that like any powerful tool, LangChain’s capabilities grow with familiarity and practice, so don’t hesitate to experiment and build more complex projects as you become more comfortable with the framework.
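The idea of a “chain” from Step 7 can also be illustrated without any LangChain dependency. The following is a conceptual sketch only, not LangChain’s implementation: each step is a plain function from string to string, and `fake_llm` is a hypothetical stand-in for a real model call so the example runs offline.

```python
from typing import Callable, List

Step = Callable[[str], str]


def make_chain(steps: List[Step]) -> Step:
    """Compose steps so that each one receives the previous step's output."""

    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text

    return run


def format_prompt(user_input: str) -> str:
    # Step 1: wrap the raw input in an instruction.
    return f"Answer briefly: {user_input}"


def fake_llm(prompt: str) -> str:
    # Step 2: stand-in for a real model call (no network access needed).
    return f"  [model reply to: {prompt}]  "


def clean_output(raw: str) -> str:
    # Step 3: post-process the model's text.
    return raw.strip()


my_chain = make_chain([format_prompt, fake_llm, clean_output])
result = my_chain("Hello, world!")
print(result)  # prints: [model reply to: Answer briefly: Hello, world!]
```

Swapping `fake_llm` for a function that calls a real model, or adding further steps, changes the chain’s behavior without touching the other steps, which is the main appeal of the pattern.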

9. “Optimizing Performance: Tips and Tricks for LangChain Beginners”

When diving into the world of LangChain, it’s essential to ensure that you’re getting the most out of this powerful tool. LangChain is a modular framework for building applications on top of large language models (LLMs) like GPT-3, and with the right optimization strategies, you can enhance its performance significantly. Here are some tips and tricks tailored for LangChain beginners looking to optimize their experience:

1. Understand the Core Components: Before you start optimizing, familiarize yourself with the core components of the LangChain ecosystem, such as LangSmith and LangServe. LangSmith traces and evaluates LLM applications, while LangServe serves chains over an API. Knowing how these components interact will help you find where time and cost are being spent in your application.

2. Efficient Query Design: When interacting with LLMs, the design of your prompts can significantly impact performance. Ensure that your prompts are clear, concise, and structured in a way that guides the model towards providing the desired output. This not only improves response quality but can also reduce the computational resources needed for each query.

3. Cache Responses: Implement a caching strategy to store responses from frequently asked queries. This will help you avoid redundant requests to the LLM, which can save on cost and reduce latency, thereby speeding up your LangChain application.

4. Batch Requests: When dealing with multiple queries, consider batching them if possible. This can reduce the overhead of sending individual requests and can be more efficient, allowing LangChain to handle multiple inputs and outputs in one go.

5. Monitor and Analyze Performance: Utilize monitoring tools to track your application’s performance. This data can help you identify bottlenecks or inefficiencies within your LangChain implementation. Analyzing this information will guide you on where to focus your optimization efforts.

6. Manage API Rate Limits: Be mindful of the rate limits imposed by LLM APIs. Efficiently manage your calls to ensure you’re not hitting these limits, which can lead to decreased performance or additional costs. This may involve implementing smart queuing systems or adjusting your application’s usage patterns.

7. Asynchronous Processing: For non-time-sensitive tasks, consider using asynchronous processing. This way, your application doesn’t need to wait for a response from the LLM before moving on to other tasks, thereby improving overall throughput and user experience.

8. Optimize for Cost: When optimizing for performance, also keep an eye on cost. Sometimes, the most performant solution may not be the most cost-effective. Balance the two by choosing the right LLM tier for your needs and adjusting based on usage patterns and budget constraints.

9. Stay Updated: The field of AI and LLMs is rapidly evolving, and so are tools like LangChain. Stay updated with the latest features, best practices, and optimizations by regularly checking the official documentation and engaging with the community.

10. Experiment and Iterate: Finally, don’t be afraid to experiment with different configurations and approaches. Performance optimization is often an iterative process, and what works for one LangChain application may not work for another. Keep testing and refining your strategies to find the sweet spot for your specific use case.
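The caching idea from tip 3 can be sketched in a few lines of standard-library Python. This is a minimal in-memory illustration with exact-match keys, assuming a model exposed as a simple function from prompt to text; `CachedLLM` and `fake_llm` are hypothetical names, and LangChain provides its own caching options that are better suited to real applications.

```python
from typing import Callable, Dict


class CachedLLM:
    """Wrap a prompt -> text function and reuse answers for repeated prompts."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm
        self._cache: Dict[str, str] = {}
        self.misses = 0  # number of times the underlying model was called

    def __call__(self, prompt: str) -> str:
        # Exact-match key after trimming surrounding whitespace.
        key = prompt.strip()
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._llm(key)
        return self._cache[key]


def fake_llm(prompt: str) -> str:
    # Stand-in for a slow, billable model call.
    return f"echo: {prompt}"


cached = CachedLLM(fake_llm)
first = cached("What is LangChain?")
second = cached("What is LangChain? ")  # same prompt apart from whitespace
print(cached.misses)  # prints: 1
```

A cache like this only helps when identical prompts recur, and it returns the stored answer even if the model would now respond differently, so consider how long entries should live.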

By leveraging these tips and tricks, beginners can optimize their use of LangChain, creating applications that are not only powerful and efficient but also cost-effective. Remember, the key to mastering LangChain is a blend of technical know-how and continuous experimentation.
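Tips 6 and 7 can be combined: run requests concurrently, but cap how many are in flight at once so you stay within a provider’s rate limit. The sketch below uses `asyncio` from the standard library; `fake_llm_call` is a hypothetical stand-in that sleeps briefly in place of a network request, and the limit of two concurrent calls is an arbitrary assumption.

```python
import asyncio
from typing import List


async def fake_llm_call(prompt: str) -> str:
    # Stand-in for an asynchronous request to a model provider.
    await asyncio.sleep(0.01)
    return prompt.upper()


async def run_all(prompts: List[str], max_concurrent: int = 2) -> List[str]:
    """Process prompts concurrently with at most max_concurrent in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def guarded(prompt: str) -> str:
        # Wait for a free slot before calling the model.
        async with semaphore:
            return await fake_llm_call(prompt)

    # gather preserves the order of the input prompts in its results.
    return list(await asyncio.gather(*(guarded(p) for p in prompts)))


results = asyncio.run(run_all(["one", "two", "three"]))
print(results)  # prints: ['ONE', 'TWO', 'THREE']
```

Raising `max_concurrent` increases throughput until the provider starts rejecting requests, so tune it against the limits that apply to your account.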

10. “Troubleshooting Common LangChain Issues: A Primer for Smooth Operation”

Navigating the world of LangChain, particularly when leveraging its tools like LangSmith and LangServe, can be an exciting journey into the realm of large language models (LLMs). However, beginners may occasionally hit roadblocks that can hinder smooth operation. Here’s a primer for troubleshooting some of the most common issues you may encounter while working with LangChain.

Firstly, if you’re experiencing installation issues, it’s essential to ensure that your system meets the prerequisites for running LangChain. Verify that you have the correct version of Python installed, as LangChain has specific version requirements for optimal performance. Additionally, ensure that pip, Python’s package installer, is up to date to avoid compatibility issues when installing LangChain.
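A quick diagnostic script can confirm these basics before you dig deeper. This sketch uses only the standard library; the minimum Python version of 3.9 is an assumption passed in as a parameter, so replace it with whatever your LangChain release actually requires.

```python
import sys
from importlib import metadata
from typing import Dict, Optional, Tuple


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def environment_report(min_python: Tuple[int, int] = (3, 9)) -> Dict[str, object]:
    """Summarize the Python, pip, and langchain versions in this environment."""
    return {
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        # min_python is an assumed threshold; check your release's requirements.
        "python_ok": sys.version_info[:2] >= min_python,
        "pip": installed_version("pip"),
        "langchain": installed_version("langchain"),
    }


report = environment_report()
for name, value in report.items():
    print(f"{name}: {value}")
```

A value of `None` for `langchain` means the package is not installed in the interpreter that ran the script, which often points to a virtual environment mix-up.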

In cases where LangChain is installed but not functioning as expected, check your configuration. LangChain is configured mainly through environment variables and the arguments you pass when constructing models and chains, so a misspelled variable name or a missing setting can lead to unexpected behavior. Review the documentation to confirm that your settings match what LangChain, LangSmith, or LangServe expects.

For those working with the LLMs, API connectivity issues are a common stumbling block. If LangChain is unable to connect to the LLM, double-check your internet connection and API keys. Invalid or expired API keys will prevent LangChain from interfacing with the LLM, so regenerating or updating your keys may be necessary.

Another frequent issue is related to resource usage. When LangChain calls a hosted LLM API, the model runs on the provider’s servers, so slow responses usually come from network latency, long prompts, or the provider itself, not from your machine. Local resources matter more if you run a model locally or load large document collections into memory. If you encounter slow performance or crashes, monitor your system’s memory and CPU usage to see which case applies.

If you’re using LangServe and face difficulties with server setup or deployment, consult the error logs for specific messages that can guide you to the root of the problem. LangServe’s documentation can be an invaluable resource for understanding common server errors and their solutions.

Lastly, the LangChain community is an excellent resource for troubleshooting. Engaging with other users on forums and discussion platforms can provide insights into common issues and their resolutions. The collaborative nature of the LangChain ecosystem means that solutions are often shared and discussed openly, so don’t hesitate to reach out for help.

By familiarizing yourself with these troubleshooting techniques, you can ensure that your experience with LangChain, LangSmith, and LangServe is as smooth and productive as possible. Remember, patience and persistence are key when working with cutting-edge technologies like LLMs, and the rewards for overcoming these hurdles are well worth the effort.

11. “Expanding Your Horizons: Advanced Features of LangChain for Enthusiasts”

Once you’ve mastered the basics of LangChain, it’s time to elevate your experience by exploring its advanced features. LangChain, with its suite of tools such as LangSmith and LangServe, is designed to cater not just to beginners but also to enthusiasts who are ready to delve deeper into the realm of Large Language Models (LLMs).

LangSmith is the tool to reach for when you want to refine and polish your LLM application. It gives you detailed traces of each run and lets you build datasets of example inputs and expected outputs for evaluation. By comparing runs across different prompts, parameters, and models, you can adapt the application to specific tasks or domains based on evidence, without retraining the underlying model.

LangServe, on the other hand, facilitates the deployment of the chains you build. It serves as a bridge between your LangChain application and the software you wish to enhance with AI capabilities. Whether you’re looking to integrate intelligent features into a web service, an app, or any other software, LangServe provides a consistent HTTP interface that makes your chains accessible.

For enthusiasts looking to push the boundaries, LangChain’s API integration capabilities are a game-changer. By utilizing APIs, you can connect your LLMs with various data sources and third-party applications, expanding the scope of your projects. This opens up a world of possibilities, from automating complex workflows to creating sophisticated chatbots or virtual assistants.

Moreover, many LLMs are trained on text in multiple languages, and LangChain works with them in the same way as with any other model. This is particularly valuable for global applications or services that cater to diverse user bases. How well a given language is handled depends on the underlying model, so test with real examples in each language you plan to support.

As you explore these advanced features, remember to keep track of the latest updates and community contributions to LangChain. The platform is constantly evolving, with new tools and features being added to enhance its capabilities. By staying informed, you can ensure that you’re always leveraging the full potential of LangChain and staying ahead in the rapidly advancing field of AI and LLMs.

In summary, LangChain’s advanced features like LangSmith and LangServe, along with API integration and multilingual support, provide enthusiasts with the tools to expand their horizons and develop cutting-edge AI solutions. By diving into these features, you can transform your understanding of LLMs into practical, innovative applications that go beyond the basics.

12. “LangChain Community and Support: Resources for Continuous Learning”

Embarking on the journey of mastering LangChain can be an exciting adventure, and the community and support available make it a collaborative and continuous learning process. The LangChain community is a burgeoning group of enthusiasts, developers, and experts who are passionate about leveraging large language model (LLM) capabilities in novel and innovative ways. Engaging with this community can significantly enhance your understanding and skills with LangChain, LangSmith, and LangServe.

One of the most valuable resources for newcomers is the official LangChain documentation and forums. These platforms offer a wealth of information, tutorials, and best practices that can help you get started with LangChain. They are designed to be accessible to beginners, providing clear guidance on setting up your environment, understanding the core concepts of LLMs, and starting your first project.

Another key component of the LangChain community is the GitHub repository. By visiting the LangChain GitHub page, you can access the source code, report issues, contribute to the project, and stay updated with the latest developments. This is also a great place to review contributions from other users, which can be incredibly insightful and educational.

Social media, professional networking sites, and chat platforms such as Twitter, LinkedIn, and Discord also host vibrant communities where LangChain enthusiasts gather to share their experiences, ask questions, and offer support. These platforms are excellent for real-time interaction and can be a great way to stay connected with the latest LangChain trends and use cases.

In addition to these online resources, various workshops, webinars, and meetups are organized by the LangChain community. Attending these events can provide you with hands-on experience and the opportunity to learn directly from LangChain experts. These gatherings are also a fantastic way to network with peers and forge connections that can be beneficial for collaborative projects or career advancement.

Finally, the ecosystem of LangChain is complemented by a range of third-party blogs, forums, and online courses that offer tutorials and deep dives into specific aspects of LangChain, LangSmith, and LangServe. Leveraging these resources can help you build a strong foundation and keep your skills sharp as you progress from a beginner to a more advanced user.

Continuous learning is key when it comes to LangChain and LLM technologies, and the support and resources provided by the community are indispensable. By actively participating and taking advantage of the myriad of learning materials available, you’ll be well on your way to becoming proficient with LangChain and contributing to this exciting field.

In conclusion, our journey through the world of LangChain has equipped you with the knowledge and tools to dive confidently into the realm of language models. From understanding the innovative potential of LangChain in our introduction to mastering the first steps of LLM integration, we have covered substantial ground. Through exploring LangChain’s core components, such as LangSmith and LangServe, you now have a solid foundation for crafting custom language solutions and streamlining model deployment with ease.

The roadmap provided for navigating the LangChain ecosystem has set you on the path to becoming proficient in LLMs, ensuring you are well-prepared to tackle your first LangChain project. With our step-by-step guide, even complete newbies can set up and optimize their projects, gaining insights into tips and tricks that enhance performance and learning how to troubleshoot common issues for smooth operation.

As you expand your horizons, remember that the advanced features of LangChain are waiting for you to explore, promising to unlock even greater capabilities as your skills progress. Moreover, the vibrant LangChain community and the wealth of support resources available are there to foster continuous learning and collaboration.

Whether you are a developer, a language enthusiast, or simply curious about the potential of LLMs, LangChain, with its LangSmith and LangServe components, offers an accessible and powerful platform to innovate and excel in the field of language model technology. Embrace the future of language models with LangChain, and join the growing number of enthusiasts who are shaping the way we interact with and utilize language in our digital world.
